Will it save us or destroy us?

Some thoughts on AI and personal agency.

“It is easy for me to imagine that the next great division of the world will be between people who wish to live as creatures and people who wish to live as machines.”

― Wendell Berry, Life is a Miracle: An Essay Against Modern Superstition

These days, part of my activist work includes learning and thinking about AI. I’m spending time talking to experts about how it works, and working with a few different groups and coalitions in a few different ways. My goal is to make it work better for a particular audience, on a particular type of AI answer platform. Dipping my toe in the waters of AI was daunting, but now I’m finding the project incredibly compelling. The potential is enormous, and so are the stakes. Getting to know AI feels a bit like encountering an alien intelligence that has recently landed on Earth: fascinating, unfathomably powerful, poorly understood, and ambiguous enough that we’re not sure whether it’s here to help us or harm us, or both.

Over the past year, the rapid rollout of AI platforms and tools has come with a particular kind of queasy futility. It feels like the technology has finally taken the driver’s seat, as we always feared it would, and that the people in charge of building it are speeding ahead, detached and out of touch. We’re offered clumsy home robots, insufferable chatbots and oceans of generative slop as the world burns. We’re told which jobs AI is “coming for,” and we see billboards encouraging bosses to replace workers with high-functioning virtual assistants. Politicians shrug and throw up their hands, disclaiming any role or responsibility. When exactly did we get here, and how did it happen so fast?

I wouldn’t say I’m a full techno-optimist, but I usually find reasons to be hopeful in the face of struggles with new technology, and I always look for ways to feel empowered. My view on technology hasn’t changed much over the years: tech is not inherently good or evil. It’s a powerful tool, one that reflects a wide set of human priorities, incentives and values, an extension of ourselves and our consciousness. It has expansive potential for outcomes in all directions, and the future depends on how we shepherd and use it.

We made the tech, and despite what we’re often told, we still have some choice in how we live alongside it.

But stewarding something this powerful requires clarity. We have to ask questions: Who is it serving? Who is it harming? What incentives are shaping it? And we need to be willing and able to articulate boundaries that reflect our values, even when the rollout of these technologies and their current political entanglements make the trajectory feel inevitable.

Defining the “we,” of course, is tricky. Our society is so deeply polarized that it’s hard to imagine a large enough group finding alignment, but that’s exactly why this moment matters. AI has arrived, but its norms are not fixed. There’s still an opportunity to decide proactively, rather than reactively, what we want and don’t want from it.

Right now, three groups are clearly emerging: people who build and sell AI, people who use AI, and people who experience its impacts, including real or potential harms. The builders and sellers are decision-makers who seem to sit far above the rest of us, insulated by technical expertise, enormous capital and a sense of urgency fueled by competition. They’ve raised billions and are racing toward the milestones they promised investors. A few are in bed with politicians. If the public wants a say in what happens next, we’ll need to be organized, and loud.

Sometimes AI companies like to imply that intelligence itself dissolves accountability. When they describe the “black box” forming answers to people’s unique questions, they act as if no one engineer, project manager or CEO could be responsible for what pops out in response: the answer is a synthesis of Internet content, it’s ALL of our responsibility, or it just is what it is.

But accountability doesn’t dissipate when software gathers, constructs and even “thinks”: it moves to the people who design, train, deploy and profit from it. The companies may feel untouchable now, but that’s because we’ve spent decades treating tech founders like celebrities and loosening regulatory guardrails in the name of innovation. Public pressure still matters. Policy still matters. Boycotts and strikes still matter.

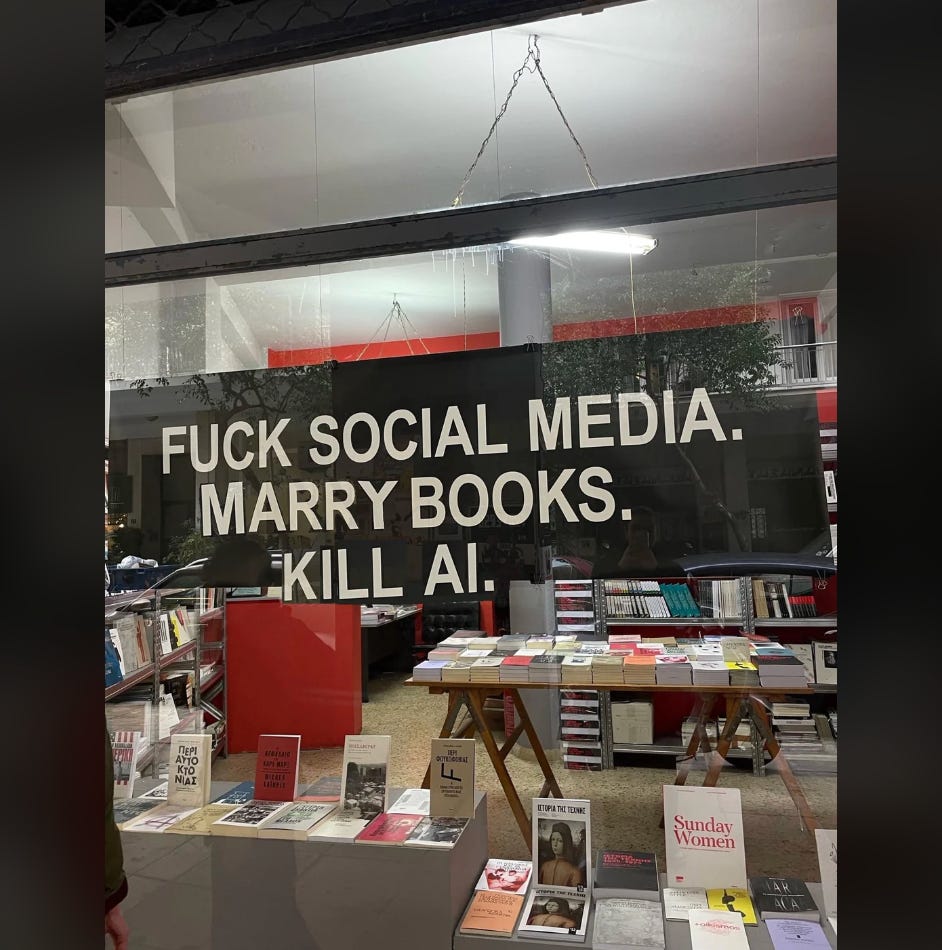

And I already see the public pushing back.

Nobody asked for this. (A reaction to new features that use excessive resources or threaten humanity’s unique gifts and roles.)

You don’t get to decide for me. (A feeling that there is an undue amount of power at the top, more or less deciding the fate of humanity.)

Kill it. (A sense that there is no viable way forward: we’re doomed to self-destruct unless we take the most extreme route to reclaiming control.)

There are so many valid reasons for resistance. We haven’t had time to build trust with this technology, and we don’t trust the vested interests at the top. We know these systems hallucinate, fabricate, and occasionally behave in disturbing ways. We see robots failing basic tasks while data centers devour energy and creative work is absorbed without consent. We’re watching companies build infrastructure and power on inflated valuations, before actual value has been proven out. We’re watching a water crisis unfold faster than the technology can solve it. There are plenty of reasons not to trust.

My focus right now is specifically on answer platforms. By the numbers, a substantial share of the population is already using these tools: Google’s AI summaries at the top of search results are seen by around 2 billion people a month, and ChatGPT claims 900 million users a week and reportedly nearly 3.5 billion a month (up from 3 billion just last summer).*

This uptake is massive, but also not surprising: getting information through direct, conversational answers to complex questions, answers drawn from large swaths of the internet, is a genuinely new and often better experience than what we had before. For many, it’s more intuitive and humane than traditional search, which required us to comb through suggested website URLs, SEO nonsense and paid ads to find the same answers.

In practice, AI answer platforms can feel miraculous. In our house we’ve used them to ask gardening questions and get instant, wise planting advice. We’ve brainstormed script ideas for our sci-fi band (probably the most appropriate use so far). We’ve used them to troubleshoot code and gotten fast solutions with context. Many of these experiences feel closer to asking a knowledgeable friend than to consulting a search engine, enhancing us instead of getting in the way. That feeling matters, and it helps explain why adoption is speeding up regardless of public discomfort or tangible environmental impact.

In a recent podcast conversation between author and tech critic Tobias Rose-Stockwell (who is also an old friend of mine) and Tristan Harris of the Center for Humane Technology, one idea stuck out: at this moment, AI contains both positive infinity and negative infinity, stretching out in both directions. All the best outcomes are still possible, and so are the worst. It could carry us into an abundant, egalitarian utopia where disease is cured, natural resources are preserved, no one has to labor and creativity is king, just as easily as it could drive us into climate catastrophe and technofeudal control. Liberation or destruction, it’s all on the table right now: pure potential.

What a wild moment to be a human.

People have all sorts of feelings about it — fear, excitement, dread — but regardless of how we feel, it has arrived and there’s no putting it back in the box. There’s no alternate reality—at least not that I know of—and I also don’t feel the least bit surprised that this is where we have found ourselves. We’ve been moving in this direction for decades, treating technology as an extension of our minds and bodies, figuring out ways to weave it into our daily lives. Our movies have shown us a robotic, agentic future. Our algorithms have gotten closer and closer to consciousness. And now here we are.

Some people think about AI’s inevitability another way, theorizing that AI has always existed and all we’ve done recently is discover it. The idea is that intelligence, like a mycelium network just beneath the soil, is not something that can be created; instead, it emerges when the right energy and creativity are applied, much like our understanding of math or markets. Today’s AI is built on our own language, logic and learning, and what changed is that we finally created the tools to reach this particular form of intelligence and learned how to host it. Mind-bending.

Whatever you believe, humans built this thing by running full steam ahead with hardware and software innovation for almost a century, because they could. Humans are curious and ambitious, and this set of technologies kept feeling good to our dopamine receptors. When you step back and look at how we as humans typically do things, doesn’t that make it feel sort of inevitable?

Now the technology mirrors our own consciousness and capability more closely than ever before, which inevitably raises uncomfortable questions: Are we ultimately trying to replace or augment ourselves? What is the value of human versus machine today? Will we see it in time? Will we agree?

I suppose in that alternate reality, in a perfect world, we would steward this tool slowly and collectively, at a responsible pace perfectly supportive of human thriving and decoupled from the capitalist incentives of money and power. We would train it to do the jobs that are unsafe and undesirable, freeing people to pursue more fulfilling activities and relationships. We would prevent and correct all exploitative uses, like deepfakes and non-consensual porn. We’d solve AI’s natural resource consumption before it started overconsuming. We’d balance ownership so all people could have a voice in its design. We’d have blockchain technology in place, embedding intellectual property credits into every piece of online art and writing before it gets absorbed by the machines.

In a perfect world we’d prioritize planetary and social well-being alongside innovation. AI would lift us up, solving our toughest problems and accelerating us toward our utopia of peace and health and well-being.

But that doesn’t track with human history, does it? History suggests something messier. Instead, we bumble our way through, swinging hard toward what we think is progress until we’re forced to backtrack and pivot before we blow it all up. See my pendulum theory here.

Corporations have a history of seizing on innovation and racing toward growth. Sometimes they take inspiration from sci-fi storytellers, who imagine the shape of a device or the aesthetic of a thinking computer first: another trippy, ouroboros-shaped creation story. Policymakers scramble to catch up, slapping on guardrails in response. AI feels like the latest swing of that pendulum, except faster, bigger, and more consequential than anything that’s come before.

A few of the big AI companies have said they want to win the race so they can protect humanity and steward the technology correctly, essentially saying: we’re the good guys, and we need to get there first so we can do it right. But as usual, no one can predict how it will all play out or whom to trust. Instead, this raises more urgent questions: How do we hold corporations accountable when their incentive to maximize revenue conflicts with the public good, and the politicians are in their pocket? What does “protecting humanity” even mean in this context? Who decides?

Humans are all about learning from their mistakes, so here we are: compelled by the prospects of AI, teetering on the edge of losing control to it, burning through resources in the search for something new. As with social media and online ad businesses before it, business incentives like product dominance, ROI and control of the market tend to be fundamentally misaligned with those of the general public. In the case of AI answer platforms, the public wants accurate, propaganda-free, ad-free information at low cost, the ability to opt out and the ability to stay digitally safe, to name a few.

My own work with AI is grounded in information hygiene. As I study how these systems answer questions on abortion access—what they say, what they omit, what sources they rely on—I think about the end user who needs and deserves complete, accurate information. I think about the pure potential of answer tools to serve up judgement-free, factual responses to complex questions. I think about the life-altering consequences of these answers as a person decides what to do next.

In health, AI could become a reliable, valuable resource by consistently answering questions on complex topics like reproductive health, which in some ways carries more weight than other health queries because of cultural stigma, relationship dynamics, and fear of repercussions or legal risk. These tools could answer as only a robot can: maximally factual and evidence-based. Thorough yet clear, with learned compassion.

But right now this isn’t quite the situation. The current answer platforms often misrepresent reality, getting facts about available abortion clinics wrong or leaving out pills by mail because of confusion about legal risk. One approach we’re experimenting with works from the bottom up: improving the answers on the websites AI is reading, and seeing whether its own answers improve in turn. Our simple theory is that if you clarify the high-authority sources of truth, the answers will improve. We’re giving direct feedback on the platforms, which may sound like screaming into the void, but at this point we believe there are still humans on the other end, responsible for taking in the feedback and making the product better. And we’re starting conversations with AI companies’ trust and safety teams to explain what we’re seeing, why answers are getting derailed, and how they can help make answers better without getting derailed themselves by the politicized environment.

My issue area is far from the only one affected, but it’s especially acute because the person asking the question might be standing at a major fork in the road, with AI serving as the next guidepost. If they see one answer, they go one way. If they see a more constricted, fear-driven answer, one that tells them they have limited or no options for ending a pregnancy, their life may change course against their will.

I’m hopeful that we can improve things, because we’re already seeing shifts in the quality of answers. When AI systems are grounded in better inputs, they perform better. This isn’t miracle-making; it’s stewardship. It also feels like we have a limited window to exert this kind of influence and build internal collaborations before the tools and their hierarchies of power become too entrenched.

I’m enjoying the work because it lets me get to know the tool before forming a hard-and-fast moral judgment (is this tool Good or Evil?) by playing with its capabilities and getting to know the alien being, and then spinning out best-case scenarios of how it could improve the search for information online, giving us the best possible experience of today’s ridiculously robust internet.

I’m also experiencing AI’s risks and pitfalls firsthand: namely misinformation, political influence and lack of control. Not to mention grappling with the existential and very real impacts of data centers and a warming climate, AI slop displacing artists’ work, and humans losing their ability to synthesize and process information.

I understand the instinct to opt out entirely. On work calls, representatives from other repro organizations sometimes say to me, with a wave of the hand, “I don’t like AI. It scares me.” And I get it.

But AI is here, whether we like it or not, and people are apparently using it by the billions. People searching for health answers certainly are: apparently 25% of ChatGPT questions are about health. By refusing to give feedback or work on improvements, I might make a statement by distancing myself from AI companies, but I wouldn’t be helping the people across the US who are already using it, people who need accurate answers about how to get help and be okay.

Not everyone has to embrace AI or ride the wave. But those who choose to stay out of the water altogether, watching its uptake, capabilities and pitfalls from the shore, are letting others dictate what happens next. Disengagement doesn’t stop the technology; it just cedes influence to the people already shaping it, who are often the ones most likely to benefit. At the moment it’s hard to know how to tap in and exert influence, or how to voice an opinion, but figuring that out is part of my research. I’m hoping to learn what think tanks, advocacy groups and organizations are forming around AI stewardship, and what trust and influence they might build.

Meanwhile, I’m informing my own ethical stance by staying in the tension: learning, experimenting, criticizing, and imagining alternatives. Holding contradiction. Paying attention to power, resources, labor, and control. Watching for more ways information could get skewed, like the inevitable introduction of ads, or the risk of thought-policing and ideological control we’re already seeing in the media.

I’m also watching closely as the economics of this technology unfold: is it a boom, or a bubble? In classic Silicon Valley style, have these new technologies been massively overvalued, overpromising immediate monetization? Will they trigger another crash like the early Internet boom and bust, when promises of usefulness and growth collided with reality and were eventually deemed impossible? And will that be a distillation in itself, answering the question of who is in control by revealing which companies remain?

Either way, the outcome won’t be neutral.

These are complex challenges, ones that require holding contradiction, that is, holding many partially conflicting ideas to be true at once: the inevitability, the benefits, the harm, the unknowns. We are collectively being called right now to create broader definitions of success in society, definitions more expansive than growth and dominance. It’s time for systems that account for people, planet, and responsibility, not just profit. And we need far more public imagination and participation in deciding what kind of future we’re building.

For now, I’m in it: researching, writing, thinking, experimenting — trying to meet the moment with curiosity instead of fear, engagement instead of avoidance.

If you’re thinking about this too, I’d love to hear what you’re reading, listening to, or wrestling with. And if you know people working on AI answer platforms, I’d love to meet them.

*This number may be inflated, as it counts anonymized/incognito questions as individual users.

Header photo by Olli Kilpi on Unsplash

No audio track this week; back next time.